Closed captions in audio and video calls

Audio and video calls are a great way for your organization to communicate with customers. However, they can also have drawbacks:

- It can be difficult to have a conversation when the participants speak different languages. This issue is exacerbated when the subject is complex and requires specialist vocabulary.
- People with a hearing impairment may struggle to follow a conversation, especially if the connection is poor.

To make audio and video calls more accessible for all users, Unblu can display closed captions in calls. This provides an additional channel through which participants can follow the conversation. You can also give participants the option to have the closed captions translated in real time.

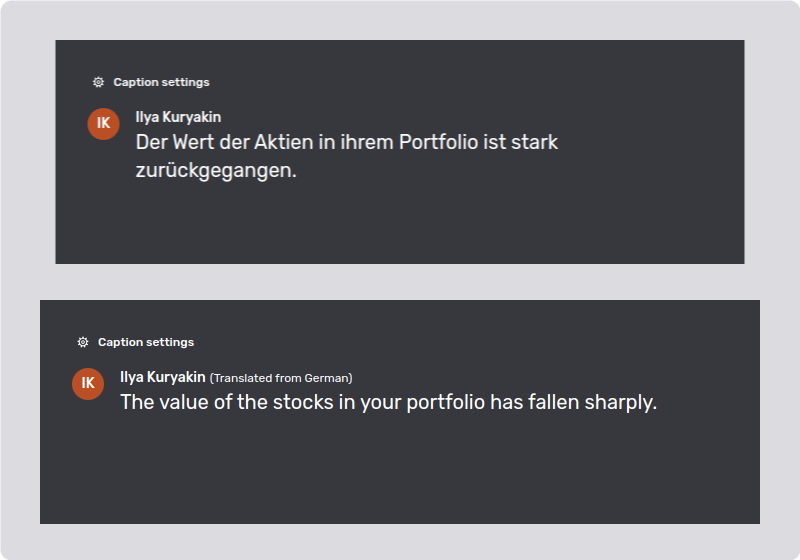

When captions are translated, the original language they’re translated from is shown above the caption.

This is a preview feature. It may be subject to change or removal with no further notice.

To enable preview features, set com.unblu.platform.enablePreview to true.

For more information on preview features, refer to the Unblu release policy.

Closed captions are only available with LiveKit as the call service provider.

Configuration

Closed captions have configuration properties in several scopes.

Closed caption configuration in conversation templates

- com.unblu.conversation.call.enableCallTranscription: Enable call transcripts. This configuration property is also required for closed captions.

  This configuration property requires a valid transcript provider configuration; see com.unblu.conversation.call.livekit.transcriptServiceProvider.

- com.unblu.conversation.call.enableClosedCaptions: The participants with access to the Closed captions button in the call UI.

- com.unblu.conversation.call.enableClosedCaptionsTranslation: The participants with access to the closed caption Translate option. The property is only taken into account if com.unblu.conversation.call.enableClosedCaptions is enabled for the participant. Requires a translation provider configuration; see com.unblu.translation.translationServiceProvider.

- You can specify the default closed caption mode for each participant role.

  - If set to OFF, the participant must enable closed captions in the call UI to see them.
  - If set to ON, the participant sees closed captions in the speaker’s language when they join a call.
  - If set to TRANSLATED, the participant automatically sees the closed captions translated into the language of their Unblu UI.

  The configuration properties for the participants' default closed caption mode are:

  - com.unblu.conversation.call.startWithAssignedAgentClosedCaptionMode for the assigned agent
  - com.unblu.conversation.call.startWithSecondaryAgentClosedCaptionMode for secondary agents
  - com.unblu.conversation.call.startWithContextPersonClosedCaptionMode for the context person
  - com.unblu.conversation.call.startWithSecondaryVisitorClosedCaptionMode for secondary visitors
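The way these conversation template properties interact can be summarized in a short Python sketch. It is illustrative only and not Unblu code: the `Role` enum, the function names, and the idea of passing the permitted roles as Python sets are assumptions made for the example. Only the property names and the OFF, ON, and TRANSLATED modes come from the text above.

```python
from enum import Enum


# Hypothetical role identifiers. The values mirror the infix used in the
# startWith...ClosedCaptionMode property names listed above.
class Role(Enum):
    ASSIGNED_AGENT = "AssignedAgent"
    SECONDARY_AGENT = "SecondaryAgent"
    CONTEXT_PERSON = "ContextPerson"
    SECONDARY_VISITOR = "SecondaryVisitor"


PREFIX = "com.unblu.conversation.call."


def default_mode_property(role: Role) -> str:
    """Return the name of the default-mode property for a participant role."""
    return f"{PREFIX}startWith{role.value}ClosedCaptionMode"


def caption_controls(role, transcription_enabled, caption_roles, translation_roles):
    """Work out which caption controls a participant gets.

    caption_roles and translation_roles stand in for the values of
    enableClosedCaptions and enableClosedCaptionsTranslation (assumed shape).
    """
    # Closed captions depend on call transcription being enabled.
    captions = transcription_enabled and role in caption_roles
    # The Translate option only counts if captions are enabled for the role.
    translate = captions and role in translation_roles
    return {"closed_captions_button": captions, "translate_option": translate}


def initial_view(mode: str) -> str:
    """Describe what a participant sees when joining a call, per default mode."""
    views = {
        "OFF": "no captions until enabled in the call UI",
        "ON": "captions in the speaker's language",
        "TRANSLATED": "captions translated into the participant's UI language",
    }
    return views[mode]
```

For example, a secondary visitor who is listed for translation but not for closed captions gets neither control in this sketch, because the translation setting is ignored unless captions are enabled for that participant.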

LiveKit (ACCOUNT scope)

- com.unblu.conversation.call.livekit.transcriptServiceProvider: This is the speech-to-text (STT) provider used to create closed captions and call transcripts. The selected provider’s access details are configured separately:

  - For Google, you must have a valid Google service account configuration in the configuration property com.unblu.gcp.serviceAccountKey or Application Default Credentials (ADC), and the service account must have the role Google Speech Client.
  - For Azure, you must provide a valid Azure Speech Services subscription key in com.unblu.azure.speechSubscriptionKey. You must also specify the Azure region where Speech Services are deployed in com.unblu.azure.speechRegion. If necessary, specify the URL of the proxy required for Azure connections in com.unblu.azure.proxyUrl.

    If you use a non-standard endpoint URL for Azure Speech Services, specify it in com.unblu.azure.speechEndpoint.

- com.unblu.conversation.call.livekit.micDataSource: The source of the microphone audio used for LiveKit transcripts.

  - If you select LIVEKIT_EGRESS, the configured LiveKit server must have egress enabled. Furthermore, you must configure the webhook entry path and allow inbound WebSockets from LiveKit to the Collaboration Server. This setting is best suited for transcripts. The latency of up to 5 seconds makes it less suitable for live closed captions.
  - If you select BROWSER_MIC_CAPTURE, data is captured from the participants' microphones in the browser and sent directly to the Collaboration Server using Unblu’s real-time framework. This setting is experimental and should only be used for evaluation purposes at present.
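As a rough pre-flight check, the speech-to-text provider prerequisites above could be expressed as follows. This is a sketch, not part of Unblu: the function name, the `GOOGLE` and `AZURE` provider labels, and the `adc_available` flag are placeholders chosen for the example, and the values actually accepted by com.unblu.conversation.call.livekit.transcriptServiceProvider may differ. The property names are taken from the list above.

```python
def missing_stt_settings(provider, config, adc_available=False):
    """Return the property names still needed for the chosen STT provider.

    provider: "GOOGLE" or "AZURE" (placeholder labels, not confirmed values).
    config: dict mapping configuration property names to their values.
    adc_available: whether Application Default Credentials are set up.
    """
    def is_set(key):
        # Treat missing keys and empty strings as "not configured".
        return bool(config.get(key))

    if provider == "GOOGLE":
        # Either a service account key or ADC satisfies the requirement.
        if is_set("com.unblu.gcp.serviceAccountKey") or adc_available:
            return []
        return ["com.unblu.gcp.serviceAccountKey"]

    if provider == "AZURE":
        # Subscription key and region are mandatory. proxyUrl and
        # speechEndpoint are optional and therefore not checked here.
        required = [
            "com.unblu.azure.speechSubscriptionKey",
            "com.unblu.azure.speechRegion",
        ]
        return [key for key in required if not is_set(key)]

    raise ValueError(f"unknown provider label: {provider}")
```

The check only looks at whether the properties are present. It can't confirm that a Google service account has the required role, or that an Azure key and region are valid, so a real deployment still needs an end-to-end test call.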

Translation (ACCOUNT scope)

To enable participants to view translated closed captions, you must configure a translation service provider. Use com.unblu.translation.translationServiceProvider to specify the translation service provider to use when dynamically translating captions into other languages.

Currently, Unblu supports Google Cloud Translation and Azure Text Translation.

If you select GOOGLE_TRANSLATE, you must have a valid Google service account configuration in the configuration property com.unblu.gcp.serviceAccountKey or Application Default Credentials (ADC) with translation enabled. The service account must have a role that permits use of the Cloud Translation API, such as Cloud Translation API User.

For more information, refer to Translation service provider.

See also

- For information on using closed captions in the Unblu UIs, refer to the Closed captions sections of the Floating Visitor UI guide.
- For information on configuring the translation service provider for closed captions, refer to the Translation service provider section of the article Translating chat messages.